Week 1

I make music and use synthesisers in my spare time, so after clarifying the objectives of this course, I chose as my starting point the TouchDesigner and virtual modular synthesiser works of pepepepebrick, an interactive artist on Instagram.

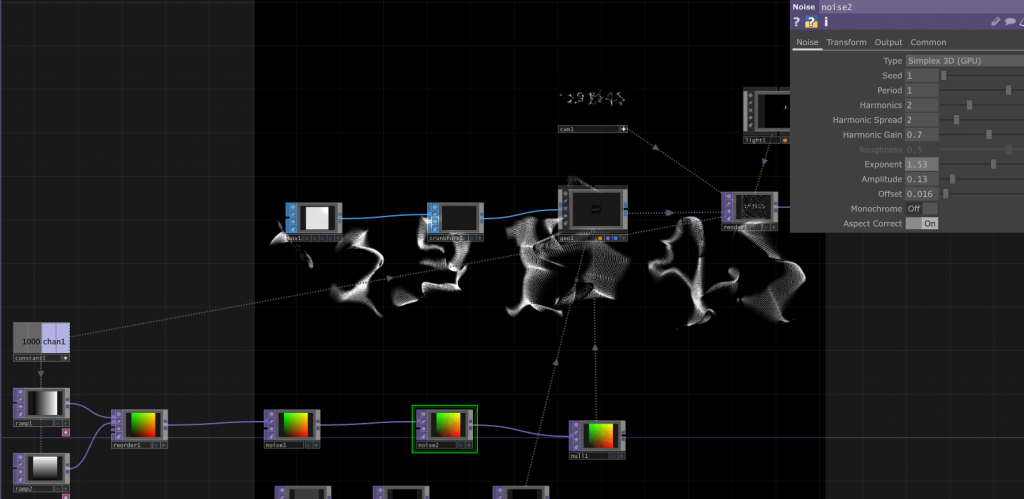

Through researching pepepepebrick’s work, I learned about TouchDesigner, a node-based visual programming environment for real-time interaction, generative art, projection mapping and data visualisation. It is well suited to creative programming, can also be used for lighting control and immersive installations, and can communicate with a wide range of external hardware and software. This made me even keener to explore it.

For my iterative project, I tried to explore the link between sound and image by combining TouchDesigner with a virtual modular synthesiser. However, I soon realised that my limited knowledge of interactive software made this approach hard to pursue, so I switched to iterating on some simpler audio-visual interaction pieces by another artist, DS_TD.

Tools / Craft

Although I had never used an interactive application like TouchDesigner before, I found its logic less obscure than writing code.

However, with so many options and settings, I decided to start with a basic tutorial to familiarise myself with the software.

I spent a few more days on YouTube learning TouchDesigner’s basic workflow and its most common components, which helped me get started. Memorising what each type of node does was the hardest part for me.

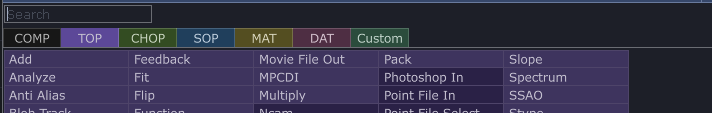

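Besides COMPs (Components), which contain and organise networks, TouchDesigner has five main families of nodes (operators): TOPs (Texture Operators) for 2D images and video, CHOPs (Channel Operators) for numeric channels such as audio, MIDI and animation curves, SOPs (Surface Operators) for 3D geometry, MATs (Material Operators) for the materials and shaders used when rendering, and DATs (Data Operators) for text, tables and Python scripts. During this learning process, I also tried using these nodes in different experiments.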

Iteration

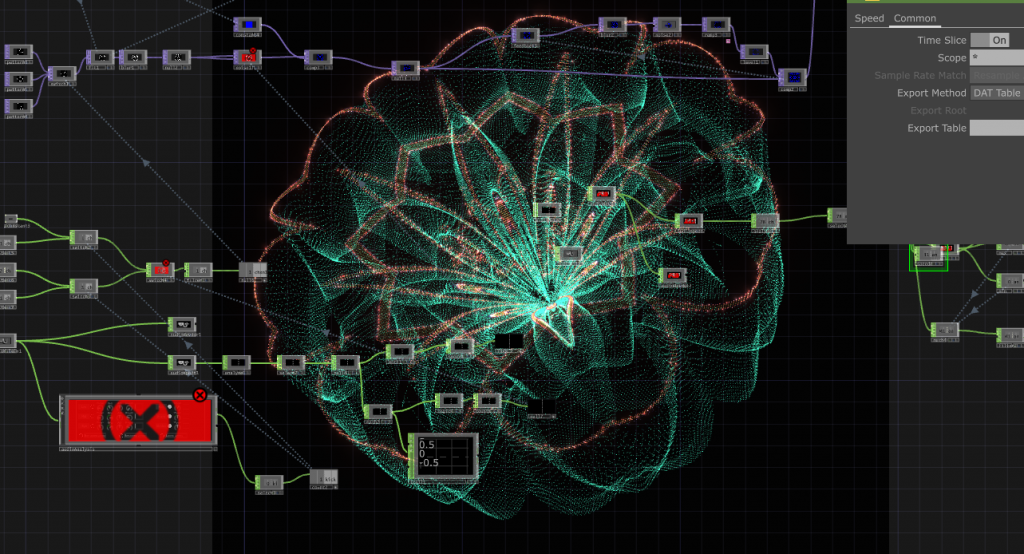

After getting to grips with TouchDesigner’s basic workflow, I started recreating DS_TD’s interactive audio-visual petal piece.

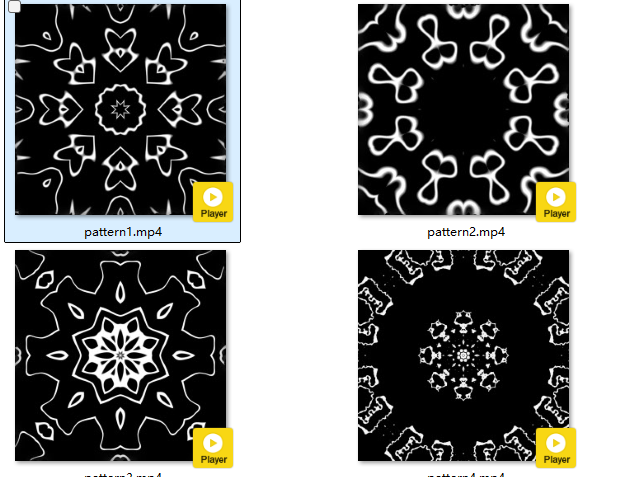

At first I thought I could animate the graphics directly in TouchDesigner, but I realised this was practically impossible at my level, so I spent some time learning how to make kaleidoscope animations in After Effects (AE).

I never managed to make the petals’ bloom look convincingly three-dimensional, and I couldn’t work out where the problem lay, so I recreated the imagery in AE and then rebuilt the whole project, which in the end achieved a similar effect.

Week 2

I began exploring what else TouchDesigner could do as interactive software by connecting it to my SP-404 sampler and using its MIDI signals as a control source to drive a range of on-screen variations.
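Inside TouchDesigner, incoming MIDI is normally handled by the MIDI In CHOP, so no code is strictly needed. Still, it can help to see what the sampler is actually sending. The plain-Python sketch below assumes the SP-404 sends standard Note On/Off messages when pads are hit and Control Change messages from its knobs. The note numbers (36, 37), the CC number (1) and the parameter names (`flash`, `rotate`, `scale`) are hypothetical placeholders, not the sampler’s documented mapping.

```python
# Sketch: turning raw 3-byte MIDI messages into 0-1 control values.
# Assumption: standard Note On (0x90-0x9F), Note Off (0x80-0x8F) and
# Control Change (0xB0-0xBF) messages. Mappings below are hypothetical.

def parse_midi(message):
    """Return (kind, channel, number, value) for a 3-byte MIDI message, or None."""
    if len(message) != 3:
        return None
    status, number, value = message
    kind = status & 0xF0      # upper nibble: message type
    channel = status & 0x0F   # lower nibble: channel 0-15
    if kind == 0x90 and value > 0:
        return ("note_on", channel, number, value)
    if kind == 0x80 or (kind == 0x90 and value == 0):  # Note On with velocity 0 acts as Note Off
        return ("note_off", channel, number, value)
    if kind == 0xB0:
        return ("cc", channel, number, value)
    return None


def to_unit(value):
    """Scale a 7-bit MIDI data byte (0-127) to the range 0.0-1.0."""
    return value / 127.0


# Hypothetical mapping from pad notes / knob CCs to visual parameters.
NOTE_TO_PARAM = {36: "flash", 37: "rotate"}
CC_TO_PARAM = {1: "scale"}


def apply(message, params):
    """Update the params dict in place from one MIDI message and return it."""
    parsed = parse_midi(message)
    if parsed is None:
        return params
    kind, _channel, number, value = parsed
    if kind == "note_on" and number in NOTE_TO_PARAM:
        params[NOTE_TO_PARAM[number]] = to_unit(value)  # pad velocity sets intensity
    elif kind == "note_off" and number in NOTE_TO_PARAM:
        params[NOTE_TO_PARAM[number]] = 0.0             # pad released: back to rest
    elif kind == "cc" and number in CC_TO_PARAM:
        params[CC_TO_PARAM[number]] = to_unit(value)    # knob position sets a level
    return params


params = {"flash": 0.0, "rotate": 0.0, "scale": 0.5}
apply(bytes([0x90, 36, 127]), params)  # pad hit at full velocity
apply(bytes([0xB0, 1, 64]), params)    # knob turned roughly halfway
print(params)
```

Normalising everything to 0-1 first keeps the later mapping step independent of whether a value came from a pad or a knob.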

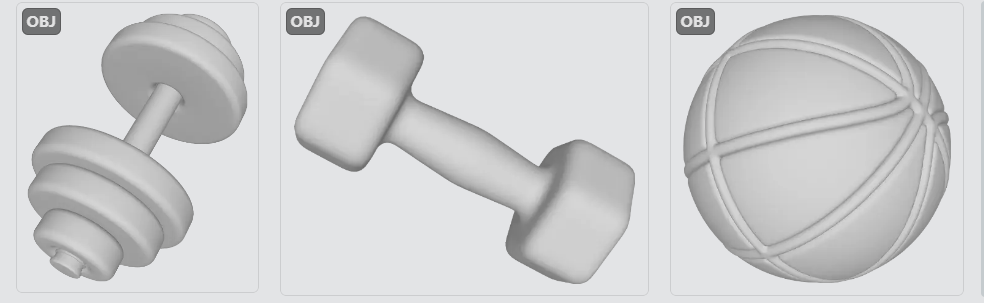

Thinking about how images and layouts interact on the page, and how those setups could map to data, I chose figurative (recognisable, real-world) sounds to drive the interaction. I went to the sports facilities at Finsbury Park and used my sampler to record basketball, badminton, running and gym sounds, intending to process them afterwards.

I was aiming for a fairly figurative piece along the same lines as DS_TD’s, so I tried modelling objects to represent each of the sounds I had recorded.

For reasons I haven’t yet worked out, TouchDesigner crashes every time I import my modelled OBJ file, which was frustrating.

In today’s world, the line between interactive and graphic design is becoming increasingly blurred. Traditional graphic design focuses on static visuals, while interactive design is all about data-driven, dynamic experiences. With tools like TouchDesigner, we can use sound to influence visual layouts, exploring new relationships between sound, imagery, and interactive interfaces.

How Does Sound Influence Interaction

During my second-week experiment, I realised that sound isn’t just something we hear: it can also be turned into data that drives visual changes. For example, the bounce of a basketball, a badminton racket hitting a shuttlecock, or a runner’s rhythmic footsteps can each be analysed in terms of frequency and amplitude. These properties can then be mapped to visual elements, making the layout more fluid: fonts, colours and even the arrangement of images can all shift in response to the sound.
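To make the idea of “sound as data” concrete, here is a minimal sketch in plain Python. It measures loudness as RMS amplitude and roughly estimates pitch by counting zero crossings (a periodic signal crosses zero about twice per cycle), then maps both onto visual properties. Zero-crossing counting is a deliberately crude stand-in for a proper spectrum analysis (in TouchDesigner that would typically come from CHOPs such as the Audio Spectrum CHOP), and the output names `font_size` and `hue` and their ranges are illustrative assumptions.

```python
import math


def rms(samples):
    """Root-mean-square amplitude: a simple loudness measure."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_freq(samples, sample_rate):
    """Rough pitch estimate: count sign changes, assume two per cycle."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    duration = len(samples) / sample_rate
    return crossings / (2 * duration)


def remap(x, in_lo, in_hi, out_lo, out_hi):
    """Linearly map x from one range to another, clamped to the output range."""
    t = (x - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def sound_to_visuals(samples, sample_rate):
    """Turn one block of audio into hypothetical visual parameters."""
    level = rms(samples)
    freq = zero_crossing_freq(samples, sample_rate)
    return {
        "font_size": remap(level, 0.0, 0.5, 12, 96),  # louder -> bigger text
        "hue": remap(freq, 50, 2000, 0.0, 1.0),       # higher pitch -> hue shift
    }


# Test signal: 0.1 s of a 440 Hz sine wave at half amplitude.
sr = 8000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(800)]
print(sound_to_visuals(tone, sr))
```

A recorded basketball bounce would give a short, loud burst (large `font_size` for a few blocks), while a sustained gym hum would give a steadier, lower reading.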

Traditional graphic design follows grid systems and composition rules, but through interactive experiments I found that these principles can become more flexible when driven by sound or user input. For instance, text spacing can expand or contract with pitch, and page elements can move rhythmically in response to beats. This turns graphic design from a static visual into something interactive and dynamic.
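The two examples in that paragraph can be sketched as small functions. This is an illustration under stated assumptions, not how the experiments were actually built. Pitch is mapped to letter spacing on a logarithmic scale, because we hear pitch in octaves, so each doubling of frequency widens the spacing by the same amount. Beats are detected very simply: a frame counts as a beat when its energy jumps by more than a chosen ratio over the previous frame, which kicks an element upward before it eases back. The ranges (80-2000 Hz, -20 to 80 spacing units), the threshold and the decay rate are all hypothetical values.

```python
import math


def tracking_from_pitch(freq_hz, lo_hz=80.0, hi_hz=2000.0, tight=-20.0, loose=80.0):
    """Map pitch to letter spacing on a log (octave) scale: low = tight, high = loose."""
    freq_hz = min(max(freq_hz, lo_hz), hi_hz)
    t = math.log2(freq_hz / lo_hz) / math.log2(hi_hz / lo_hz)
    return tight + t * (loose - tight)


def beat_offsets(energies, threshold=1.5, jump=30.0, decay=0.7):
    """Per-frame vertical offset: jump on a sudden energy rise, then decay back to 0."""
    offsets, offset, prev = [], 0.0, None
    for e in energies:
        if prev is not None and prev > 0 and e / prev > threshold:
            offset = jump      # simple onset: energy rose sharply vs. last frame
        else:
            offset *= decay    # no beat: ease the element back towards rest
        offsets.append(offset)
        prev = e
    return offsets


print(tracking_from_pitch(400))              # a mid-range pitch
print(beat_offsets([1.0, 1.0, 3.0, 3.0, 3.0]))  # one beat at frame 2
```

Comparing each frame with the previous one (rather than with a fixed level) means a quiet badminton hit and a loud basketball bounce can both register as beats.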

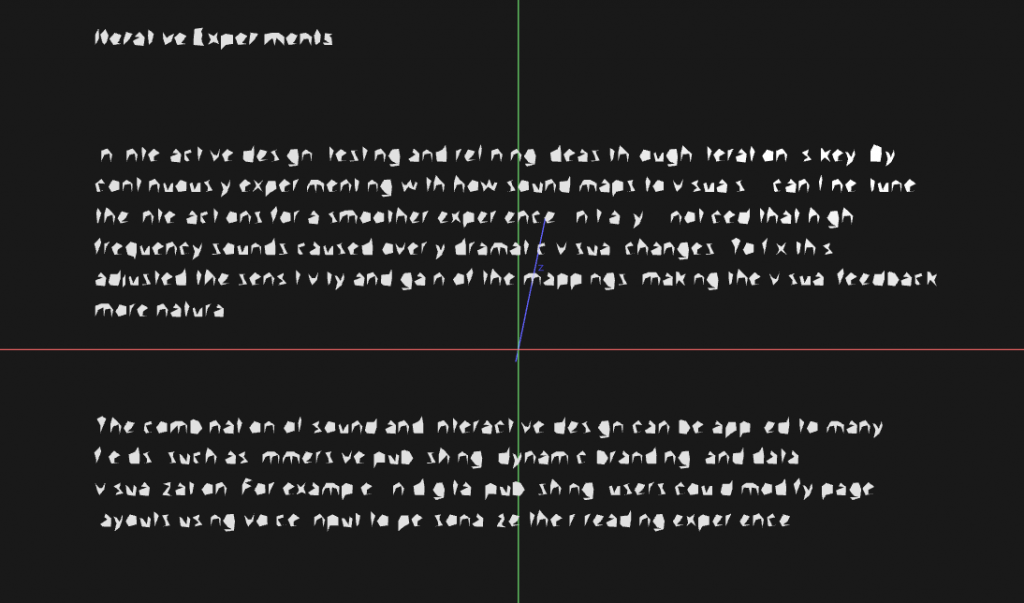

Iterative Experiments

In interactive design, testing and refining ideas through iteration is key. By continuously experimenting with how sound maps to visuals, I can fine-tune the interactions for a smoother experience. Initially, I noticed that high-frequency sounds caused overly dramatic visual changes. To fix this, I adjusted the sensitivity and gain of the mappings, making the visual feedback more natural.
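The post doesn’t say exactly how the sensitivity and gain were tuned, so the following is only a minimal sketch of one common approach, assuming the analysed sound arrives as a 0-1 level per frame. The value is scaled by a gain, clamped to a safe range, then smoothed with a fast “attack” and a slower “release”, so sharp high-frequency transients no longer make the visuals jump and snap back. In TouchDesigner, the Lag and Filter CHOPs provide this kind of smoothing; the function and parameter names here are hypothetical.

```python
def smooth(values, gain=1.0, attack=0.5, release=0.1, ceiling=1.0):
    """Apply gain, clamp, then one-pole smoothing with separate attack/release rates.

    attack and release are the fraction (0-1) of the remaining distance to the
    target covered each frame; a lower release makes visuals fall back more slowly.
    """
    out, y = [], 0.0
    for v in values:
        x = min(max(v * gain, 0.0), ceiling)  # gain, then clamp to a safe range
        rate = attack if x > y else release   # rise quickly, fall gently
        y += rate * (x - y)                   # move part of the way towards the target
        out.append(y)
    return out


spiky = [0.0, 1.0, 0.0, 0.0, 1.0, 0.0]  # e.g. sharp transients from a racket hit
print([round(v, 3) for v in smooth(spiky, gain=0.8)])
```

Lowering `gain` reduces how far a spike can push the visuals, while the attack/release pair controls how abruptly they move; tuning the two separately is what makes the feedback feel more natural.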

The combination of sound and interactive design can be applied to many fields, such as immersive publishing, dynamic branding and data visualisation. For example, in digital publishing, users could modify page layouts using voice input to personalise their reading experience.

As part of this iterative experimentation process, I used the idea to create dynamic posters for my friend dav’s new album.

Week 3

about the writing
